An outlier is an observation in your data set that is significantly different from the other observations. It can be rare patterns, or some kind of error in your data.

There are many reasons why you might care about them. You may want to detect rare events, banking fraud, or remove errors in your data for cleaner analysis. Outliers in data might lead to less accurate machine learning models. This business of identifying and discarding suspicious data has been going on for at least 200 years. I want to focus on outlier detection in Normally distributed data, but before we do that, lets have a brief overview of some of the general ways of tackling this problem.

General approaches

Approaches often fall into two major categories.

The first category uses techniques that essentially boil down to looking at how close (geometrically), or similar (using some notion of similarity), data points are to each other. The pariahs that are far away from the major groupings are considered suspicious outlier candidates. For Normally distributed data, you could think of using the standard deviation as a simple form of clustering analysis. People commonly pick an arbitrary amount of deviation that they consider extreme for their use case (e.g., between 2 and 3), and consider points outside that spread as suspicious.

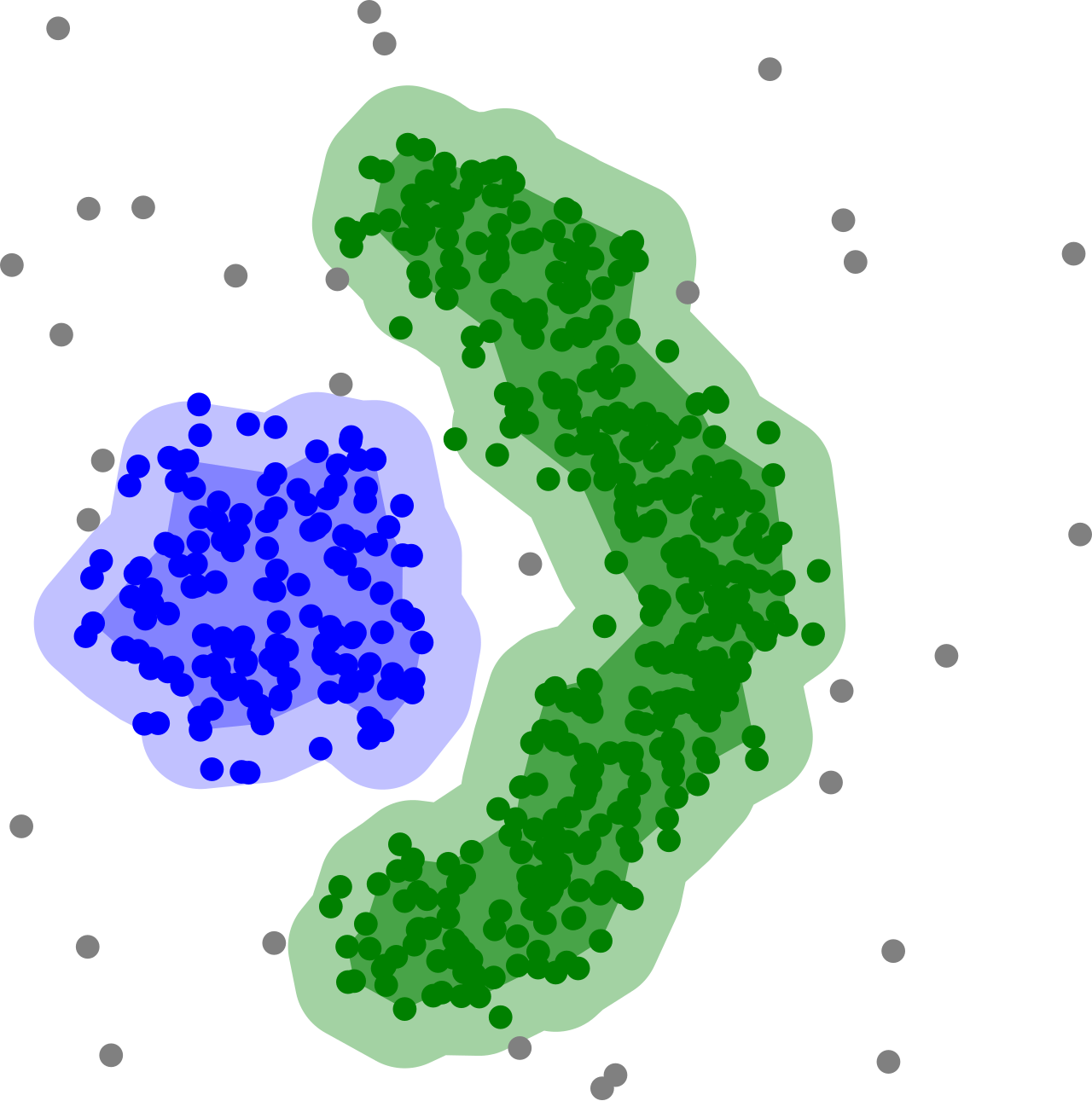

A slightly less trivial clustering technique is DBSCAN. It puts points in the same group if they are within a specified distance of another point in the group.

The second category is to fit classifier functions. This is a data driven approach (machine learning) where you use data to fit the parameters of a function that decides whether something is an outlier. Once you have fit (trained) this function, you can use it on new data points not in your training set to determine whether something is an outlier. There are a variety of different modelling approaches you can take, from simple linear classifiers, to random forests, and neural networks.

There is no perfect technique that works for all data sets, so it’s useful to experiment with different approaches. A good Python package to use to experiment with various techniques is the Python Outlier Detection module.

Outlier detection in Normally distributed data

Many real life data sets are Normally distributed. In this situation, outlier detection works reasonably well with simple statistics. I mentioned earlier that it’s common for people to look at the mean, and standard deviations for outlier detection. The motivation behind this makes sense since it’s trying to find points that deviate a lot from the average case. It has the added bonus of being a statistic that many of us are familiar with. However, outliers can have a dramatic impact on the mean and standard deviation, making the detection unusable in extreme cases.

Take for example, the data set of values \(\{1, 3, 3, 6, 8, 10, 10, 1000\}\). The mean is \(130.13\) and the standard deviation is \(328.80\). If you took the detection threshold to be 3 standard deviations, this means any value between \(-856.27\) and \(1116.52\) would be considered normal, covering the entire dataset. Most of us would probably agree that \(1000\) should have been labelled an outlier though. It is an illustration of why mean and standard deviation is not particularly robust against outliers. Statisticians formalise this idea in a concept called the breakdown point.

Breakdown point: a measure of robustness

The breakdown point of an estimator is the proportion of wrong data that can be in the data set before the estimator starts giving you terrible estimates. As an example, let’s look at the formula \(\frac{1}{n} \sum_{i=0}^n x_i\) as an estimator of the mean. This estimator has a \(0\%\) breakdown point. Why? Because for any data set you have, large errors on a single data point could ruin the estimate of the mean in a dramatic way, like in the example before. The interquartile range has a \(25\%\) breakdown point, and the median has a \(50\%\) breakdown point. Because those measures are concerned with the relative position of each data point to others, rather than the values themselves, they can tolerate some points having large errors. Statistics with a high breakdown point are resistent statistics, and robust against outliers. It gives the estimator some protection from the outliers impacting the estimations. Ideally we don’t want the outlier to impact the estimation of whether something is an outlier or not. That would be like letting a baker judge his own competition on who has the best pie.

Given medians have such nice properties, it would be desirable to use them in outlier detection instead of using means and standard deviations.

U MAD BRO?

A smart guy by the name of Carl Friedrich Gauss figured out how to do this in 1816 using a metric called the Median Absolute Deviation (MAD). This baller of a mathematician is often considered to be the most powerful mathematician to have ever lived. They once found one of his unpublished notebooks. It contained so many remarkable results, that it would have advanced mathematics by 60 years if it had been published at the time. If MAD’s good enough for Gauss, it’s good enough for us. So what is MAD?

Let \(\tilde{x}\) be the median of your data set. Then \(\text{MAD} := 1.4826 \times \text{median}\{\|x_i - \tilde{x}\|\}\). What it is essentially doing is looking at the distances of each data point from the median, then taking the median of those distances. The \(1.4826\) scaling factor is derived from a relationship between MAD and the standard deviation. The scaling factor is different for different distributions, see this.

Now that we know how MAD is defined, we can use it to flag outlier candidates in a similar way to mean and standard deviation. If you choose the threshold as 3 for example, then any data point \(x\) such that \(\tilde{x} - 3*MAD < x < \tilde{x} + 3*MAD\) would be considered ok, and anything outside that range would be considered an outlier.

Using the earlier example of \(\{1, 3, 3, 6, 8, 10, 10, 1000\}\), we calculate \(MAD=5.1891\), with a median of \(7\). This means any value \(7-3*5.1891 < x < 7+3*5.1891\) is considered ok, i.e., any \(x\) within \(-8.57 < x < 22.57\) is ok. This time, \(1000\) is picked up as a potential outlier since it falls outside that range.

Almost Normal data

Unfortunately if you have non-Normally distributed data, things can get hairy. If the data is almost Normal but possibly skewed, you could try making it more Normal-like through transformations (like a log transform) and then applying MAD, or by calculating a different scaling constant for the different distribution. However, if the non-Normality is bad enough, it might still lead to bad performance, e.g., if you have an asymmetric shape or long tails (although there are transformations for some of those types too).

In those hairy situations, you may want to look into compensating for those quirks by making the thresholds more adaptive, or go for more sophisticated machine learning techniques. Some of the links below might get you started for some ideas to try.

More information

1. Engineering statistics handbook

3. Outlier detection for skewed data

4. Double MAD outlier detector

5. Python Outlier Detection package

Banner photo by Randy Fath on Unsplash